Sydney SEOs Complete Guide to Technical SEO

Technical SEO covers the aspects of your website that sit outside its content and its backlinks: infrastructure, security, code minimisation and speed. It is distinct from off-page SEO, which deals with signals from other sites. The aim is to improve every part of your website’s infrastructure and optimize it for better search engine visibility. This not only helps search engines crawl and index your site efficiently, it also signals to crawlers that the site is professionally built and hosted. In this reference guide, we will cover key aspects like site speed, mobile usability, and advanced techniques to improve your site’s performance.

Technical SEO Key Takeaways

- Technical SEO is crucial for improving website performance, user experience and visibility to search engine crawlers.

- Key components include site speed, mobile usability, secure connections (HTTPS), file size reduction and limiting complex code, all of which significantly impact search rankings and user satisfaction.

- Regular audits and advanced techniques like log file analysis, page speed testing and structured data implementation help maintain and optimize your site’s technical SEO over time.

Understanding Technical SEO

Technical SEO is the core building block of search engine optimization. It acts as the framework for content discovery, user experience and trust. Its primary purpose is to optimize your website’s infrastructure, making visitor interactions quick, efficient and enjoyable while making it easy for search engines to access, read and understand your content. Think of technical SEO as the foundations of your home: without a strong base, everything built on top is at risk. Getting this underlying technical infrastructure right is critical for search engines to crawl and index your site efficiently, which in turn gives your content the best chance of performing well in search results.

Learning about all the aspects of technical SEO might seem daunting at first, but with a systematic approach, anyone can master it. The learning curve involves focusing on two primary underlying fundamentals - site structure and page speed. As you practice and implement our strategies, you’ll find that the complexities become more manageable and easier to understand.

As mentioned, the role of technical SEO extends beyond search engines; it also enhances user experience by removing friction, risk, and ambiguity. A well-optimized site not only ranks better but also provides a seamless experience for visitors. By implementing some of these ideas and strategies, you will soon discover how much damage plugins, themes and add-ons can do to a standard website, how they disrupt a good user experience and, in turn, how they affect your rankings. Good technical SEO also preserves link equity and maintains your site’s authority and relevance, ultimately increasing your organic traffic.

In today’s competitive digital landscape, understanding and implementing technical SEO for your website is not just important, it’s indispensable. So, as we go deeper into this guide, remember that mastering these techniques is key to better search rankings and user satisfaction.

Why is Technical SEO Important?

In an era where search engines are increasingly sophisticated, ensuring your website is visible to them is paramount. Optimizing your website’s infrastructure enhances its performance in organic search, directly impacting your search rankings and overall visibility. Just as importantly, a well-optimized site offers a superior user experience, which is critical for reducing bounce rates and increasing click-throughs and customer satisfaction.

Technical SEO addresses key issues that can disrupt user experience, such as slow loading times, broken links and missing images. Neglecting it leaves those issues in place.

Important aspects include:

- Fast-loading content, as users are likely to abandon sites that take too long to load.

- Improving website speed, which can significantly reduce bounce rates, leading to better rankings and higher conversion rates.

- Optimizing technical aspects like security and performance, which increases trust with users and helps avoid potential ranking penalties from search engines.

This holistic approach ensures that both users and search engines have a positive experience with your site, driving more traffic and ultimately leading to higher conversion rates.

In the following sections, we’ll explore the key components required for your website's technical SEO success and how you can implement them to achieve these benefits.

Key Components of Technical SEO

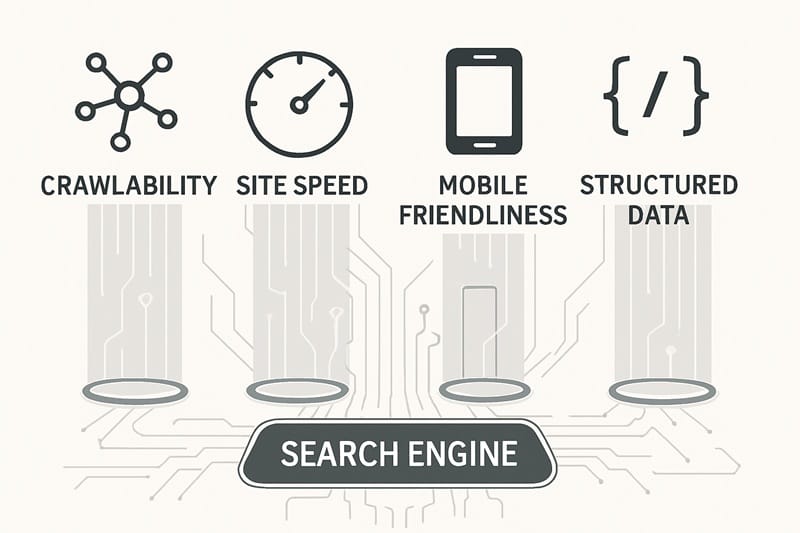

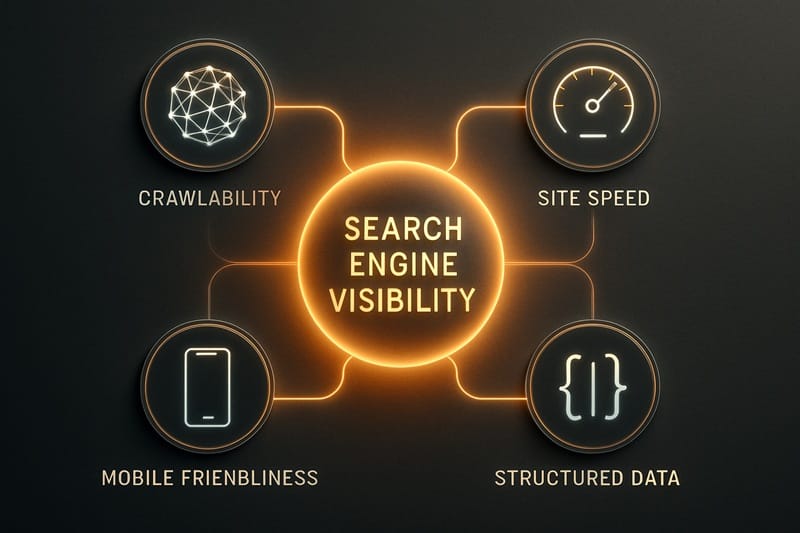

Technical SEO is a multifaceted discipline that focuses on improving various aspects of your website, such as its architecture, speed, mobile usability, and overall health. Each of these components is as important as the next, and neglecting one can impact the others. For instance, a well-structured site simplifies navigation, enhancing user experience and making it easier for search engines to crawl and index your content. A consistent URL structure helps by providing clarity and context to users, web admins and search engines alike.

In the following subsections, we’ll delve deeper into three critical components of technical SEO: site speed and performance, mobile usability, and secure websites (HTTPS). Each of these elements plays a vital role in ensuring your website is optimized for both users and search engines.

Site Speed and Performance

Website speed is a confirmed ranking factor in Google Search, directly influencing how well your site performs in search engine results. At the same time, users are impatient. If your pages load slowly, they are likely to leave, leading to increased bounce rates. In fact, Google research has reported that 53% of mobile site visits are abandoned when a page takes longer than three seconds to load.

Tools and techniques to improve site speed include:

- Using tools like Google PageSpeed Insights to assess your site’s speed and identify areas for improvement.

- Minifying HTML and CSS.

- Running caching systems, such as Varnish and Redis.

- Reducing image sizes.

- Limiting bloat from plugins, add-ons and themes.

- Keeping web development simple and clean.

- Using Content Delivery Networks (CDNs) to improve loading times.

Improving your site’s speed can provide a competitive edge, enhancing both user experience and search engine rankings, and ensures that your website not only attracts visitors but also keeps them engaged.

You also need to consider geographical location when thinking about site speed. There is little point hosting your Sydney business in the US, because every request from a Sydney customer must then cross the Pacific and back, adding considerable lag.

Mobile Usability

With Google’s mobile-first indexing, the ranking of your website’s pages is primarily based on the mobile version of your site. In conjunction with this, our statistics now show that the majority of the websites we perform SEO optimisation for are visited more by mobile devices than desktop devices. Therefore, a frustrating mobile experience can lead to negative SEO outcomes and reduced visibility.

To improve mobile usability, consider the following:

- Use tools like Google’s Lighthouse to identify mobile usability issues and get detailed insights for improvement.

- Invest in responsive web design to ensure your website is mobile-friendly.

- Regularly test your site using tools such as PageSpeed Insights and Lighthouse to stay ahead of any mobile usability issues. (Google retired its standalone Mobile-Friendly Test tool in late 2023.)

Mobile usability is not just about having a mobile version of your site; it’s about ensuring that the mobile experience is seamless and user-friendly. This includes optimizing site speed, ensuring easy navigation, and providing a responsive design that adapts to various screen sizes. Enhancing mobile usability improves user experience and boosts your site’s performance in search results.

Secure Websites (HTTPS)

HTTPS is the secure version of HTTP, designed to protect user information by encrypting the connection between the server and the browser. Google uses HTTPS as a ranking signal, so security contributes to search performance as well as user trust. Moving from HTTP to HTTPS requires an SSL/TLS certificate (still commonly called an SSL certificate, although modern sites use TLS, the successor to Secure Sockets Layer). This certificate is the key to securing your website. Free SSL/TLS certificates are available from providers like Let’s Encrypt.

HTTPS secures website connections and encrypts user data, which is crucial for anyone hosting an ecommerce site or any other website that handles sensitive information. Ultimately, implementing HTTPS is a must in today’s climate; without it, your website and business lack credibility and trustworthiness in the eyes of users and search engines.

Ensuring your site is secure is a crucial step in maintaining trust, which underpins your website’s technical SEO.

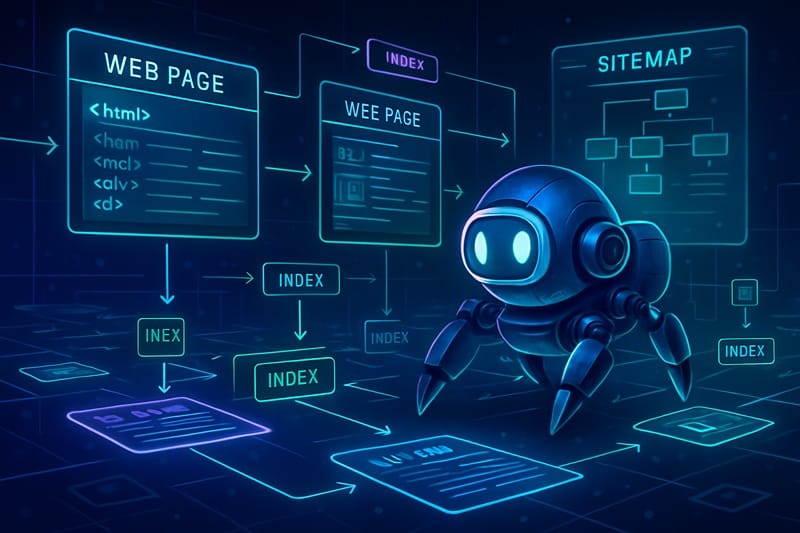

Optimizing Crawling and Indexing

Crawlability refers to the ability of search engines to access and navigate the content on your website. Throughout the day, automated robots visit your website and crawl, or follow, its links to determine what your business does, how it does it and what valuable information your site may have for the world. It is therefore imperative that you invest time in designing a site structure and architecture that allows for easy navigation and is split up appropriately, benefiting both users and search engine crawlers.

Efficient crawlability and indexability are the foundation of technical SEO strategies, ensuring that your content is accessible to search engines and users alike.

In the following subsections, we’ll explore two key tools for optimizing crawlability and indexability: the robots.txt file and XML sitemaps. These tools help guide search engine crawlers by providing specific instructions on which pages to crawl and index. Do not treat them as optional: properly configuring these elements enhances your site’s visibility, prevents low-value pages from being crawled and improves your performance in search results.

The Robots.txt File

By default, crawlers will attempt to crawl every file and image they come across on your site. The robots.txt file is a critical component of technical SEO because it lets you give crawlers specific instructions about which pages or directories to skip. This text file helps manage server load and crawl budget by keeping search engines away from less significant pages. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so use the noindex tag for pages that must stay out of the index. Always check your work, as improper configuration can unintentionally block essential pages, which can severely impact your site’s performance in search results for a long time. You can find your robots.txt file at your homepage URL with ‘/robots.txt’ added, and you can check how Google reads it using the robots.txt report in Google Search Console.

Properly configuring your robots.txt file is essential for ensuring that search engines can efficiently crawl and index your site. Clear instructions to web crawlers optimize your site’s crawlability, ensuring important pages are accessible to search engines.
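Before publishing a robots.txt change, it can help to test the rules locally. The following is a minimal sketch using Python’s standard `urllib.robotparser`; the rules, the `www.example.com` domain and the paths are hypothetical and not taken from any real site. Python’s parser does not apply rules in exactly the same way as Googlebot in every edge case, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site (an assumption for
# illustration only).
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Check a few hypothetical paths the way a crawler would.
for path in ("/", "/services/technical-seo/", "/cart/", "/wp-admin/"):
    url = "https://www.example.com" + path
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{path}: {'crawlable' if allowed else 'blocked'}")
```

Running a check like this against your list of important URLs is a quick way to catch a rule that accidentally blocks an essential page.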

XML Sitemaps

XML sitemaps are invaluable tools for search engines to discover and index all relevant pages on your site. An XML sitemap contains a map of your entire site or, at a minimum, a list of its important pages. It is presented to search engines such as Google, helping them find and index the referenced pages more efficiently. It is essential that the links in an XML sitemap point to live pages and are regularly updated to reflect the current site structure.

An up-to-date XML sitemap is a quick way to give search engines the information they need to understand, navigate and index your core site. However, an unmaintained or incorrect sitemap file will do more harm than good, so regular, preferably automatic, updates to the XML sitemap are crucial for reflecting changes in your site’s structure and content.
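As an illustration of automatic sitemap updates, here is a minimal sketch that builds a sitemap from a list of pages using Python’s standard library. The `build_sitemap` function name, the URLs and the dates are hypothetical; in practice the page list would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, lastmod 'YYYY-MM-DD') tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for an example site.
PAGES = [
    ("https://www.example.com/", "2025-03-01"),
    ("https://www.example.com/services/technical-seo/", "2025-02-14"),
]

sitemap_xml = build_sitemap(PAGES)
print(sitemap_xml)
```

Only live, indexable URLs should be passed in, so that the sitemap never points search engines at removed or redirected pages.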

Internal Linking Strategies

Internal linking is a powerful SEO strategy that helps both users and search engines navigate your site. Well-designed sites with well-structured internal linking mitigate the risk of orphaned content or posts, ensuring all pages are discoverable by visitors and search engines. Internal links and their labels help Google understand your site’s structure and content strategy, and determine the significance and context of each page. This is where on-page SEO practices such as keyword research and keyword optimisation are key, ensuring you reference content with appropriate keywords.

To enhance your SEO efforts, add internal links pointing to high-priority content, guiding users and search engines to your key pages and high quality content.

Broken internal links show a lack of structure and professionalism. All links should be checked periodically, not only for breakage but also for current market relevance.

Quality content that is published and linked from your website’s homepage typically receives more link value, improving its chances of ranking higher in search results. The reverse is true for poor content: Google’s algorithms are there for the users, and weeding out poor content is always at the top of the list.

Adding links to recent posts from existing content helps search engines index them faster and can prevent valuable pages from becoming orphan pages, especially on sites with many pages.

Incorporating links to popular posts and cornerstone content signals their importance to search engines, driving additional traffic.

Ensuring a robust internal linking strategy is vital for enhancing your site’s SEO and user experience.
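Orphaned pages can be found by walking your internal links from the homepage and listing any known page that is never reached. The sketch below assumes you already have a map of which pages link to which (for example, exported from a crawler); the `SITE_LINKS` data and the `find_orphans` helper are hypothetical.

```python
from collections import deque


def find_orphans(links, start="/"):
    """Return known pages that cannot be reached by following links from start.

    links maps each known page to the list of pages it links to.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(links) - seen)


# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/"],
    "/blog/": ["/blog/post-a/"],
    "/blog/post-a/": ["/services/"],
    "/blog/post-b/": ["/"],  # nothing links to this page
}

orphans = find_orphans(SITE_LINKS)
print(orphans)
```

In this example `/blog/post-b/` links out to the homepage but receives no internal links itself, so neither visitors nor crawlers following links would discover it.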

Handling Duplicate Content

Duplicate content refers to blocks of content that are either exactly the same or very similar across different URLs, which can negatively affect your site’s performance in search results. Tools like Semrush’s Site Audit tool can help identify duplicate content issues that may be impacting your site’s performance. When duplicate content issues arise, they dilute ranking signals, confuse attribution, and can lead to content being overlooked by search engines. Don’t duplicate content across your sites, and redirect any secondary domain names to your primary website with permanent (301) redirects; a 302 signals a temporary move and is not the right choice here. Build up all content on the website that has the stronger backlink profile.
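A simple way to spot exact duplicates is to normalise each page’s text and group URLs by a hash of the result. This is a minimal sketch under that assumption, with hypothetical page data; it only catches copies that are identical after whitespace and case are normalised, and it is not how Semrush or any other audit tool works internally.

```python
import hashlib
from collections import defaultdict


def normalise(text):
    """Lower-case the text and collapse all runs of whitespace."""
    return " ".join(text.lower().split())


def find_exact_duplicates(pages):
    """Group URLs whose normalised text is identical.

    pages maps URL -> extracted page text.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalise(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text.
PAGES = {
    "/shoes/": "Running shoes for every distance.",
    "/shoes/?ref=email": "Running  shoes for every distance.\n",
    "/boots/": "Hiking boots built for rough trails.",
}

duplicates = find_exact_duplicates(PAGES)
print(duplicates)
```

Near-duplicates, such as product pages that differ by a single word, need a similarity measure rather than an exact hash.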

Sometimes duplicate content is unavoidable. In the following subsections, we’ll explore two effective strategies for handling this content: the noindex tag and canonical tags. These tools can help you manage duplicate content issues and ensure that your site maintains a strong SEO presence.

Noindex Tag

The noindex tag is one of those tools every SEO marketer has in their tool bag but rarely uses. It is used to prevent specific pages from being indexed by search engines. This is particularly useful for non-essential pages, such as tag and category pages on your WordPress site, which ultimately contribute to duplicate content issues if left unchecked.

Applying the noindex tag to WordPress category and tag pages enhances your site’s overall SEO performance by ensuring only relevant and high-quality content is indexed.

Using the noindex tag helps search engines focus on the most important pages of your site, improving your site’s visibility and ranking potential. It’s a simple yet effective way to manage duplicate content and maintain a clean, optimized site structure.
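To confirm that a page really carries the noindex directive, you can inspect its robots meta tag. The sketch below uses Python’s standard `html.parser`; the `is_noindexed` helper and the sample HTML are hypothetical, and it only checks the meta tag, not an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindexed(html):
    """Return True if the page's robots meta tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page sources.
TAG_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
POST_PAGE = "<html><head><title>A blog post</title></head></html>"

print(is_noindexed(TAG_PAGE))
print(is_noindexed(POST_PAGE))
```

A check like this is useful in both directions: it confirms tag and category pages are excluded, and it flags important pages that have picked up a noindex by mistake.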

Canonical Tags

Canonical tags are another essential tool for managing duplicate content. The canonical tag indicates to search engines the preferred version of a page. When multiple URLs have similar content, canonical tags consolidate ranking signals, ensuring that search engines prioritize the correct version. This process, known as canonicalization, prevents the dilution of ranking signals and helps maintain a strong SEO presence.

By giving each duplicate or near-duplicate page a single canonical tag that points to the preferred URL, you guide search engines to the most authoritative version of your content, improving that content’s ranking in search results. This strategy is particularly useful for ecommerce sites with multiple product pages that may have similar content. Implementing canonical tags effectively ensures that search traffic is focused and impactful, avoiding the pitfalls of duplicate content.
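A quick audit can confirm that each page declares exactly one canonical URL. This is a minimal sketch using Python’s standard `html.parser`; the `get_canonical` helper and the sample HTML are hypothetical. It returns `None` when the tag is missing or when a page declares more than one, since conflicting canonicals are a problem in their own right.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def get_canonical(html):
    """Return the page's canonical URL, or None if missing or conflicting."""
    parser = CanonicalParser()
    parser.feed(html)
    if len(parser.canonicals) == 1:
        return parser.canonicals[0]
    return None


# Hypothetical product page variant that points back to the main listing.
VARIANT_PAGE = (
    "<html><head>"
    '<link rel="canonical" href="https://www.example.com/shoes/">'
    "</head><body>Red running shoes</body></html>"
)

print(get_canonical(VARIANT_PAGE))
```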

Structured Data Implementation - Schema Markup

Structured data is a type of markup that gives search engines a more detailed picture of a page’s content so they can understand and index it more accurately. By adding schema markup, you can enhance your content’s visibility in search results through rich snippets, which display more detailed information about your page. Formats for structured data include JSON-LD, Microdata, and RDFa, with JSON-LD being the preferred choice due to its simplicity and effectiveness.

Comparing page performance before and after you add structured data showcases its impact. Tools like Google’s Structured Data Markup Helper and the Yoast SEO plugin can assist in implementing structured data on your site.

Additionally, the Rich Results Test can be used to validate structured data and preview its appearance in search results. Implemented well, structured data can enhance your site’s SEO performance and the way your pages are presented to users in search.
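To make the JSON-LD format concrete, here is a minimal sketch that generates a schema.org `LocalBusiness` snippet ready to place in a page’s `<head>`. The `local_business_jsonld` helper and all business details are hypothetical, and the properties shown are only a small subset of what schema.org defines.

```python
import json


def local_business_jsonld(name, url, phone, locality):
    """Return a <script> block containing LocalBusiness JSON-LD markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": locality,
            "addressCountry": "AU",
        },
    }
    markup = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + markup + "\n</script>"


# Hypothetical business details for illustration only.
snippet = local_business_jsonld(
    name="Example Plumbing",
    url="https://www.example.com/",
    phone="+61 2 5550 0000",
    locality="Sydney",
)
print(snippet)
```

Whatever generates the markup, paste the output into the Rich Results Test before deploying it to confirm it is read as intended.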

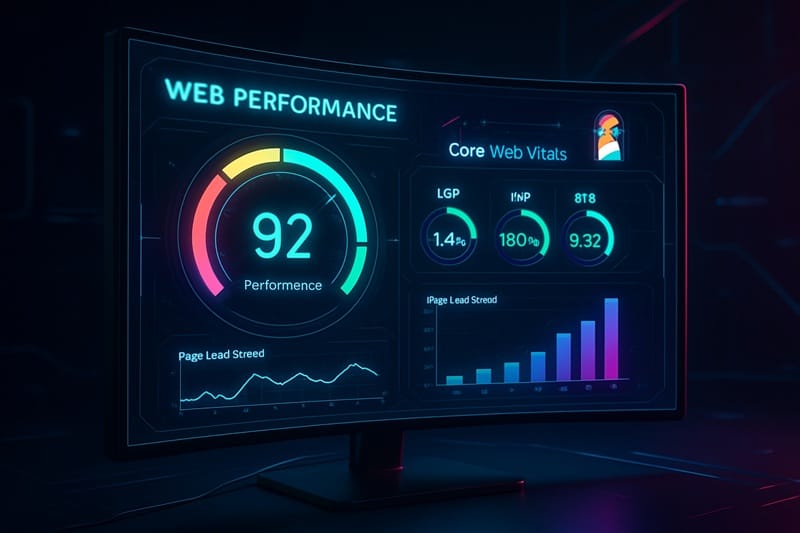

Core Web Vitals Optimization

Core Web Vitals are crucial metrics that Google uses to evaluate user experience, including loading speed, interactivity, and visual stability. To maintain optimal performance, focus on these metrics:

- Largest Contentful Paint (LCP): Keep at 2.5 seconds or less to ensure optimal loading performance.

- Interaction to Next Paint (INP): Keep under 200 milliseconds to ensure a responsive user experience.

- Cumulative Layout Shift (CLS): Aim for a score of 0.1 or less to maintain visual stability during page loads.

Poor Core Web Vitals negatively affect both user experience and Google traffic. It is essential to optimise these metrics as best you can to provide a professional platform for your visitors and to show search engines that you are a professional small business.

Regular site audits combined with tools like Google Search Console can help monitor and optimize Core Web Vitals.
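The three targets above can be turned into a simple pass/fail check for measurements you have collected from your monitoring tools. This is a minimal sketch; the `failing_metrics` helper and the sample measurements are hypothetical, and it treats each limit as inclusive, which is an assumption about how to handle values that sit exactly on a threshold.

```python
# Targets from the list above: LCP in seconds, INP in milliseconds,
# CLS as a unitless score.
THRESHOLDS = {"LCP": 2.5, "INP": 200, "CLS": 0.1}


def failing_metrics(measured):
    """Return the names of metrics that exceed their target, sorted by name."""
    return sorted(
        name for name, limit in THRESHOLDS.items() if measured[name] > limit
    )


# Hypothetical measurements for two pages.
home_page = {"LCP": 3.1, "INP": 180, "CLS": 0.1}
contact_page = {"LCP": 1.9, "INP": 240, "CLS": 0.25}

print(failing_metrics(home_page))
print(failing_metrics(contact_page))
```

Here the home page fails only on loading speed, while the contact page needs work on both responsiveness and layout stability.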

Advanced Technical SEO Techniques

Advanced technical SEO techniques can transform a technically sound site into a traffic powerhouse.

Optimizing elements like server response times and reducing render-blocking scripts can further enhance your site’s technical SEO and speed, yielding significant improvements in search rankings and organic traffic.

In the following subsections, we’ll explore two advanced techniques: JavaScript SEO and log file analysis. These techniques address specific challenges and provide insights into how search engines interact with your site, enabling you to optimize it further for better performance and visibility.

JavaScript SEO

JavaScript SEO presents unique challenges: if content is only built in the browser, a crawler such as Googlebot may see a blank or incomplete page where users see a functional layout, at least until the page has been rendered. JavaScript frameworks therefore require specialized strategies to ensure proper indexing and ranking. Server-side rendering and pre-rendering make your content available to web crawlers in the initial HTML. Dynamic rendering, which serves crawlers a pre-rendered version of JavaScript-heavy pages, can also work, although Google now describes it as a workaround rather than a long-term solution.

Closing the technical gap between JavaScript-heavy sites and search engine crawlers improves your site’s visibility and performance in search results. Implementing these strategies keeps your site accessible and optimized for both users and search engines, enhancing its overall SEO health.

Log File Analysis

Analysing server logs provides valuable insights into real bot behaviour on your website. Log file analysis helps validate improvements in URL crawl priority, enhancing search engine visibility. This process is critical for understanding how search engine crawlers interact with your site, identifying opportunities for optimization.

Regular log file analysis allows SEO professionals to view page load times, crawl paths and visitor experiences. Analysing these logs allows admins to better optimize their site’s structure for both visitor and crawler access and performance. Combined with carefully documenting the load time of each page, this provides a deeper understanding of your site’s technical markers and helps ensure that every aspect of your site is at its best and is easily indexed by crawlers.
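As a starting point for log file analysis, the sketch below counts Googlebot requests per URL and flags crawled URLs that returned errors. It assumes your server writes the common "combined" access log format; the `googlebot_summary` helper and the sample log lines are hypothetical. A user agent string can be spoofed, so a production check would also verify the requesting IP address.

```python
import re
from collections import Counter

# Pattern for the combined access log format (an assumption about your server).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

# Hypothetical log lines for illustration only.
SAMPLE_LOG = "\n".join([
    f'66.249.66.1 - - [10/Mar/2025:06:25:14 +1100] "GET /services/ HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:06:25:19 +1100] "GET /old-page/ HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.7 - - [10/Mar/2025:06:26:02 +1100] "GET /services/ HTTP/1.1" 200 5123 "-" "{BROWSER_UA}"',
    f'66.249.66.1 - - [10/Mar/2025:07:01:44 +1100] "GET /services/ HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
])


def googlebot_summary(log_text):
    """Return (hits per path, error hits per path) for Googlebot requests."""
    hits = Counter()
    errors = Counter()
    for line in log_text.splitlines():
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        hits[match["path"]] += 1
        if match["status"].startswith(("4", "5")):
            errors[match["path"]] += 1
    return hits, errors


hits, errors = googlebot_summary(SAMPLE_LOG)
print("Crawled:", dict(hits))
print("Errors:", dict(errors))
```

URLs that are crawled often but return errors, like `/old-page/` here, are good candidates for a redirect or for removal from internal links and the sitemap.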

Regular Technical SEO Audits

Regular technical SEO audits are essential for maintaining competitiveness and ensuring consistent site performance. These audits provide actionable insights that help improve search performance and foster trust with search engines and users. Monitoring technical SEO health regularly helps identify and fix issues as they arise, ensuring optimal SEO performance.

Tools like Google Search Console and Semrush’s Site Audit tool can be used to conduct technical SEO audits effectively. Regular audits of Core Web Vitals are also crucial for identifying and rectifying performance issues over time. Additionally, the URL Inspection tool in Google Search Console, which is free to use, can help streamline this process.

Maintain a set and rigorous schedule for SEO audits to ensure that your site remains optimized and competitive in the ever-evolving digital landscape.

Summary

Mastering technical SEO is a journey that involves understanding and implementing various components to enhance your site’s performance and visibility. At the same time, much of it is common marketing sense. From optimizing site speed and mobile usability to ensuring secure connections and addressing duplicate content, each aspect plays a crucial role in your site’s overall SEO health and demonstrates professionalism to your visitors. Be sure to perform regular audits and spend time on advanced techniques like JavaScript SEO and log file analysis to further refine your strategy, ensuring that your site remains competitive and user-friendly into the future.

In conclusion, technical SEO focuses on the core foundation of your website, upon which all other SEO efforts are built. By focusing on these key areas, you can create a robust and optimized site that not only ranks well in search results but also provides an exceptional user experience. As you implement these strategies, remember that the digital landscape is constantly evolving, and staying ahead of the curve is key to maintaining your site’s success. Keep learning, keep optimizing, and watch your site thrive in the competitive world of 2025.

Frequently Asked Questions

What are the 4 types of SEO?

The four types of SEO you should know are on-page, off-page, technical, and local SEO. Each type is essential for enhancing your website's visibility and ranking in search engines.

What is technical SEO, and why is it important?

Technical SEO is all about fine-tuning your website's backend so search engines can easily navigate it. It’s crucial because a well-optimized site boosts your search rankings and enhances user experience.

How does site speed impact SEO?

Site speed directly affects your SEO because slower sites can increase bounce rates, reduce click-throughs and hurt your rankings. Speeding up your site not only enhances user experience but also boosts your chances of climbing higher in search results.

What role does mobile usability play in SEO?

Mobile usability is key for SEO because Google primarily crawls and ranks the mobile version of your site. A seamless mobile experience is therefore essential for boosting your visibility and keeping users engaged, so make sure your design is responsive.

How do canonical tags help with duplicate content?

Canonical tags help by indicating the main version of a page, which consolidates ranking signals and prevents confusion over duplicate content. This way, search engines know exactly which URL to prioritize.